How the Ear Works

In this article, we’re going to have a close look at the tool we all use every day: the ear. This small organ has quite a few surprises in store for us. We’ll see that it’s literally crammed with equalizers and dynamic compressors, including a multi‑band one. It even includes an extremely efficient filter bank, as well as a highly sophisticated analogue‑to‑digital converter. Armed with this knowledge, sometimes referred to as ‘psychoacoustics’, we’ll discover numerous practical consequences for music production. Those include the choice of monitoring level, ideas for how to deal with bass frequencies in a mix, and a surprising antidote to frequency overlap.

Note that this article won’t attempt to cover psychoacoustics in its entirety. In particular, we’ll be restricting our focus to monaural audition and setting aside the notion of integration time, which is the audio equivalent of ‘persistence of vision’.

The Recording Studio In Your Head

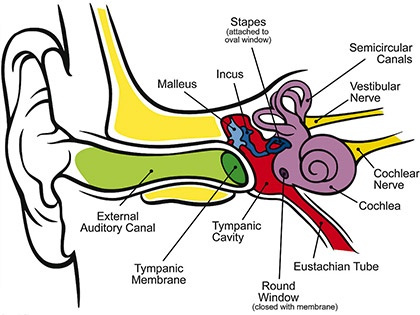

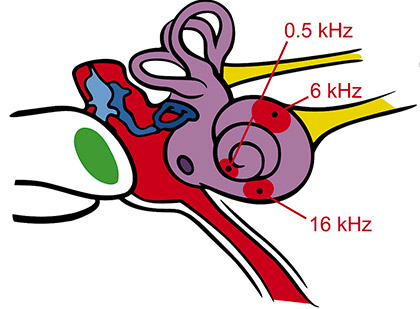

We’ll start our study of the ear by looking at Figure 1. This drawing shows the morphology of the ear as it is usually represented, divided into three sections. The outer ear consists of the auditory canal and the exterior of the tympanic membrane, better known as the eardrum. The malleus, incus and stapes, which are small bones often referred to as ossicles, belong to the middle ear, along with the interior of the tympanic membrane. Then there is the inner ear, which includes the cochlea and the semicircular canals. Finally, two nerves connect the ear to the brain: the cochlear nerve and the vestibular nerve. (The semicircular canals and the vestibular nerve don’t relay any information relating to hearing; their purpose is to give us a sense of gravity and balance, so we’ll leave them aside.)

What we call ‘sound’ is in fact a progressive acoustic wave: a series of variations in air pressure spreading out from whatever source made the sound. When these pressure variations strike the ear, they find their way through the external auditory canal to the tympanic membrane, setting it into vibration. The signal is thus converted to mechanical vibrations in solid matter. These vibrations of the tympanic membrane are transmitted to the ossicles, which in turn transmit them to the cochlea. Here the signal undergoes a second change of nature, being converted into pressure variations within a liquid. These are then transformed again by specialized hair cells, which convert the liquid waves into nerve signals.

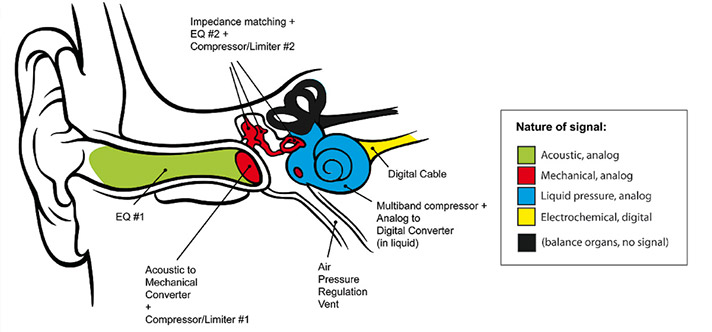

This extraordinary signal path encompasses four distinct states of information: acoustic, mechanical (solid), mechanical (liquid), and electric (more specifically, electro‑chemical). The very nature of the information also changes: from analogue, it becomes digital. Really! Whereas mechanical information propagation is analogous to the original sound wave, the nerve signal is even more decorrelated from the sound wave than AES/EBU audio signals can be. Put simply, the ear includes a built‑in analogue‑to‑digital converter. Figure 2 summarizes these changes of state and nature in the sound information.

The Outer Ear: EQ & Compression

Besides such changes in state and nature, the audio signal is also subject to important changes in content. It is, in short, rather heavily equalized and compressed. Let’s begin with the external auditory canal, which has the shape of a shallow tube. Now, as I explained in a previous SOS article, reverb in a very small space is so short that it’s perceived as EQ, rather than as ambience. The reverberation of the auditory canal boosts frequencies around 3 kHz by 15 to 20 dB.
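As a rough cross-check (a textbook idealization we’re adding, not the article’s own model), the canal can be treated as a quarter-wave resonator: a tube open at the outside and closed by the eardrum. The 2.5 cm canal length and 343 m/s speed of sound below are assumed typical values, not figures from the article.

```python
SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C (assumed)

def quarter_wave_resonance(length_m: float, c: float = SPEED_OF_SOUND) -> float:
    """Lowest resonance of a tube open at one end and closed at the other.

    The closed end (here, the eardrum) is a pressure maximum and the open end a
    pressure minimum, so the tube holds a quarter wavelength: f = c / (4 * L).
    """
    if length_m <= 0:
        raise ValueError("length must be positive")
    return c / (4.0 * length_m)

canal_length = 0.025  # assumed typical adult ear canal length: about 2.5 cm
print(f"{quarter_wave_resonance(canal_length):.0f} Hz")  # prints "3430 Hz"
```

A longer 3 cm canal gives roughly 2.9 kHz, so in this simple model the exact peak varies from ear to ear.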

At this point, the signal is transformed into mechanical vibrations by the eardrum, which also acts as an EQ. To understand why, compare it to an actual drum. Strike a timpano (kettledrum) and it will resonate at certain frequencies, which are inversely proportional to the size of the instrument. Strike a snare drum, which is much smaller, and it will resonate at higher frequencies. Strike an eardrum, and it resonates at even higher frequencies, filtering the input signal accordingly. The tympanic membrane is also attached to a muscle called the tensor tympani. When confronted with high sound pressure levels, this muscle contracts, heavily damping the eardrum’s movements. It is, in other words, a mechanical compressor/limiter, letting low‑level vibrations through unaltered but damping larger ones.
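The size dependence can be made concrete with the ideal circular membrane, a physics idealization we’re swapping in here (real drums, and the cone-shaped eardrum, depart from it). Its lowest mode is f = 2.405 / (2πa) × √(T/σ), with radius a, tension per unit length T and mass per unit area σ. The membrane values in the sketch are hypothetical; only the ratios matter. Note that frequency also rises with tension, which becomes relevant when the tensor tympani tightens the eardrum.

```python
import math

J01 = 2.404825557695773  # first zero of the Bessel function J0

def membrane_fundamental(radius_m: float, tension_n_per_m: float,
                         areal_density_kg_m2: float) -> float:
    """Fundamental (0,1) mode of an ideal circular membrane clamped at its rim."""
    wave_speed = math.sqrt(tension_n_per_m / areal_density_kg_m2)
    return J01 * wave_speed / (2.0 * math.pi * radius_m)

# Hypothetical membrane values: only the ratios below are meaningful.
base = membrane_fundamental(0.30, 2000.0, 0.25)
smaller = membrane_fundamental(0.15, 2000.0, 0.25)  # half the radius
tighter = membrane_fundamental(0.30, 8000.0, 0.25)  # four times the tension

print(round(smaller / base, 6))  # 2.0: halve the size, double the frequency
print(round(tighter / base, 6))  # 2.0: quadruple the tension, one octave up
```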

The Middle Ear: EQ, Compression & Impedance Matching

Behind the eardrum, we find the ossicles. The purpose of these minute bones is to convert the eardrum vibrations into pressure variations in the cochlear fluid. Now, converting acoustic waves into variations in fluid pressure is no easy matter — look at what happens when you’ve got water in your ears. This means that conversion of acoustic waves from air to water is anything but efficient. Put differently, fluids have a high input impedance when receiving acoustic waves.

The ear’s answer to the problem is simple: give me a lever and I can move the earth! To override this high input impedance, the ossicles form a complex system of levers that drastically increase pressure variations from the eardrum to the entrance of the inner ear. This is made possible physically by the fact that the eardrum is 20 times the size of the cochlear window. It really works as a conventional lever does: low pressure across a wide area is converted into higher pressure on a small area.
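The area argument translates directly into a pressure gain, since pressure is force divided by area. A minimal sketch, assuming an ideal, lossless transfer and using only the 20:1 size ratio quoted above; the optional `lever_ratio` parameter is a hypothetical addition left at 1, because the article gives no figure for the ossicles’ own lever arm.

```python
import math

def lever_pressure_gain(area_ratio: float, lever_ratio: float = 1.0) -> float:
    """Pressure gain when a force collected over a large area acts on a small one.

    Concentrating the same force onto an area `area_ratio` times smaller
    multiplies the pressure by `area_ratio`; a mechanical lever ratio, if any,
    multiplies the force as well.
    """
    return area_ratio * lever_ratio

def pressure_ratio_to_db(ratio: float) -> float:
    # Sound pressure is an amplitude quantity, hence 20 * log10.
    return 20.0 * math.log10(ratio)

gain = lever_pressure_gain(20.0)  # eardrum about 20 times the cochlear window
print(f"x{gain:.0f} pressure, about {pressure_ratio_to_db(gain):.0f} dB")
```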

Dealing with impedance matching through a system of levers as complex as the ossicles doesn’t come without side‑effects. The ossicles’ frequency response is not flat, which turns them into another EQ. In this case, the response is decent around 0.5 kHz, gets even better near 1‑2 kHz, and then degrades steadily above that. The ossicles also act as a compressor/limiter, thanks to what’s called the stapedian muscle. Like the tensor tympani in the case of the eardrum, the stapedian muscle stabilizes the ossicles at high levels.

The middle ear also contains the Eustachian tube, which connects the cavity behind the eardrum to the back of the throat and keeps the air pressure there equal to the outside pressure. Its purpose is easy to grasp: seal the opening at the rear of a kick drum, and you suddenly get much less sound! Likewise, if the cavity behind the eardrum is sealed, you suddenly have problems hearing properly. This happens regularly, for example when we’re on an airplane or when we have a cold. In both cases, the Eustachian tube gets clogged, and that prevents the tympanic membrane from moving as it should.

The Inner Ear: Multi‑band Compression, Pitch Tracking & ADC

By now, the audio signal has reached the inner ear, and that means the cochlea. This snail‑shaped organ is filled with liquid, so logically enough it must be watertight, to prevent any fluid from leaking. This explains the need for the round window, a small, elastic membrane on the surface of the cochlea that allows the fluid inside to move. Liquids are incompressible, and without this membrane the fluid enclosed inside the cochlea would completely block the movements of the ossicles. Indeed, stiffening of the oval window, the opening on which the stapes rests, can lead to hearing losses of about 60 dB.

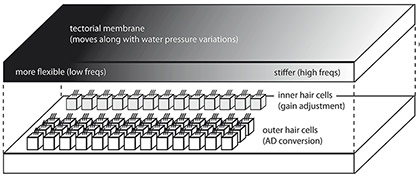

Inside the cochlea we find the tectorial membrane, which moves along with the pressure variations of the cochlear fluid. As shown in Figure 3, this membrane is in contact with the cilia on the top of the hair cells. There are two kinds of hair cells. The outer hair cells are the actual receptors. When the tectorial membrane moves, so does the hair on the outer cells. This movement is then encoded into electrical digital signals and goes to the brain through the cochlear nerve. The inner cells have a different role: when the audio signal gets louder, they stick themselves to the tectorial membrane in order to limit its movements, playing the role of another dynamic compressor.

This tectorial membrane exhibits a clever design. Its stiffness is variable, and decreases gradually towards the center of the ‘snail’. This is a way of tuning the membrane to different frequencies. To understand the phenomenon, consider guitar tuning. When you want the pitch of a string to be higher, you tighten it so it becomes more tense, and stiffer. Generally speaking, stiffer materials are able to vibrate at higher frequencies. This makes the tectorial membrane a bank of filters, with an important result: outer cells are frequency‑specific, each group of cells being dedicated to particular frequencies. Also consider the inner cells, and their ability to attenuate the tectorial membrane’s movement. They function as a frequency‑specific compressor; in other words, a multi‑band compressor!
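For readers who think in studio terms, here is what frequency-specific compression means in its simplest form: each band gets its own static compressor curve, so a loud band is turned down while quieter bands pass untouched. This is a sketch of a generic multi-band compressor, not a model of the cochlea; the threshold, ratio and band levels are arbitrary illustrative numbers.

```python
def compress_db(level_db: float, threshold_db: float, ratio: float) -> float:
    """Static compressor curve: unity below threshold, slope 1/ratio above it."""
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio

def multiband_compress(band_levels_db: dict, threshold_db: float = 60.0,
                       ratio: float = 4.0) -> dict:
    """Compress each frequency band independently.

    band_levels_db maps a band's center frequency (Hz) to its level (dB).
    A loud band is attenuated without affecting the quiet ones.
    """
    return {freq: compress_db(level, threshold_db, ratio)
            for freq, level in band_levels_db.items()}

# Hypothetical input: loud energy around 1 kHz, quieter bands elsewhere.
bands = {250: 50.0, 1000: 90.0, 4000: 55.0}
print(multiband_compress(bands))  # {250: 50.0, 1000: 67.5, 4000: 55.0}
```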

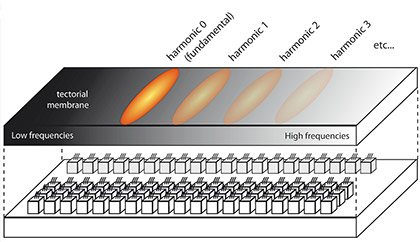

The tectorial membrane’s decreasing stiffness towards its end serves another important purpose, which is frequency tracking. A particular audio frequency will set the membrane in motion at a particular position, and that vibration will be sensed by a specific set of outer cells. A comparatively lower frequency will set the membrane in motion closer to the center of the ‘snail’, and that vibration will be sensed by another set of outer cells. The brain, by analyzing which set of outer cells was put in motion, will then be able to tell that the second frequency was the lower one. Notice how, during this process, the tectorial membrane really acts in the manner of a filter bank, performing an actual spectral analysis of the input signal. Figure 4 illustrates the rough position of a few key frequencies on the cochlea.
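The frequency-to-place mapping illustrated in Figure 4 is often approximated by the Greenwood function, an empirical fit to human cochlear data that we’re swapping in here; the article doesn’t use it. It is usually stated for position along the basilar membrane, measured from the apex (the center of the ‘snail’), as F = A(10^(a·x) - k), with the commonly quoted human constants A = 165.4, a = 2.1 and k = 0.88.

```python
import math

# Greenwood constants commonly quoted for the human cochlea.
A, a, k = 165.4, 2.1, 0.88

def greenwood_frequency(x: float) -> float:
    """Characteristic frequency (Hz) at relative position x (0 = apex, 1 = base)."""
    return A * (10 ** (a * x) - k)

def greenwood_position(freq_hz: float) -> float:
    """Inverse mapping: relative distance from the apex for a given frequency."""
    return math.log10(freq_hz / A + k) / a

# Lower frequencies land nearer the apex, higher ones nearer the base.
for f in (100, 1000, 10000):
    print(f"{f:>6} Hz -> {greenwood_position(f):.2f} of the way from the apex")
```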

Harmonic sounds come as a set of regularly spaced pure tones: if the fundamental frequency is 100 Hz, the harmonic frequencies will be 200 Hz, 300 Hz, 400 Hz and so on. As shown in Figure 5, each one of those frequencies will correspond to a particular area of the tectorial membrane. Suppose a given harmonic sound comes with its fundamental frequency plus nine harmonics. In this case, no fewer than 10 distinct areas of the tectorial membrane will be set in vibration. This provides an abundance of coherent information to the brain, which will have no difficulty in quickly and easily finding the right pitch. This is what makes the human ear so powerful for pitch identification.

With the hair cells, we come to the end of the audio path inside the ear. Hair cells are sensory cells that pass their signals on to the neurons of the cochlear nerve, and the purpose of the outer hair cells is to convert the mechanical vibrations of their cilia into nerve signals. Such signals are binary (all or nothing), and seem to be completely decorrelated from the analogue signals to which they correspond. In other words, they’re digital signals, and the outer hair cells are analogue‑to‑digital converters.

What’s It All For?

There is no denying that the ear is quite a complicated device, with a lot of processing built in. We’ve met no fewer than four EQs and three compressors. Are those really necessary? And what can be their purpose?

Let’s begin with the built‑in compressors. They’re not always active: in quiet surroundings, none of them is functioning. The louder the audio signal that reaches the ear, the more the compressors attenuate the signal that gets through to the outer hair cells. This enables the human ear to withstand a dynamic range of roughly 140 dB, which corresponds to a ratio of 100,000,000,000,000:1 in sound intensity (10,000,000:1 in sound pressure) between the loudest and the quietest sounds we can perceive. Considering we’re dealing with three tiny mechanical compressors, that’s no mean feat.
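The decibel arithmetic behind that figure, as a short sketch: intensity (power) ratios use 10·log10 and pressure (amplitude) ratios use 20·log10, so the same 140 dB span is 10^14:1 in intensity but 10^7:1 in pressure.

```python
def db_to_intensity_ratio(db: float) -> float:
    # Intensity (power) ratios use 10 * log10, so the ratio is 10 ** (dB / 10).
    return 10 ** (db / 10)

def db_to_pressure_ratio(db: float) -> float:
    # Pressure (amplitude) ratios use 20 * log10, so the ratio is 10 ** (dB / 20).
    return 10 ** (db / 20)

print(f"{db_to_intensity_ratio(140):.0e}")  # 1e+14
print(f"{db_to_pressure_ratio(140):.0e}")   # 1e+07
```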


What About The Bass?

Naturally enough, the way the ear works has huge consequences for how we perceive music, and for how we make it. Take the EQs inside the ear: we’ve seen that they emphasize the frequencies around 0.5‑4 kHz. Those three octaves contain the frequencies we are most sensitive to. And, naturally enough, they are the ones most favored by musicians and composers, consciously or not. Think about it: how many concertos have been written for violin, and how many for double bass? How many for trumpet, and how many for tuba?

In more modern applications, consider the kick drum in a mix. If you want it to be heard, you have to boost the upper‑mid frequencies present in its attack. The same goes for the electric bass: if you want it to be completely drowned in a mix, remove the upper‑mid frequencies! It’s as simple as that. To make bass instruments clear and audible, you need to make sure the mid‑range frequencies are in place. Low frequencies themselves are clearly useful (they can convey roundness, body, strength and so on), but any part of the musical language linked to understanding, anything that requires sensitivity, needs to be inside or near the 0.5‑4 kHz range.

Before the advent of the studio, this 0.5‑4 kHz constraint was not much of an issue, because most instruments operate within this frequency range anyway. With electroacoustic music (and that includes many kinds of pop music nowadays), one must be more careful. The 0.5‑4 kHz range should contain the most important part of the message. To carry the essence of your music, forget about those bass frequencies: your priority is elsewhere.

The same naturally applies to the very high frequencies. Don’t try to convey your musical message using the 4‑20 kHz range; it’s useless. (This, admittedly, is so obvious that only a handful of unreasonable avant‑garde composers with a strong tendency to sadism have ever been tempted to write and produce music based mainly on such frequencies.)

The ‘Ghost Fundamental’

Looking back at Figure 5, one observation springs to mind. Together, the harmonics carry more information about the pitch of a sound than the fundamental frequency does. There can be as many as 16 audible harmonics, setting 16 zones of the tectorial membrane in motion, whereas the fundamental only puts one zone in motion. Not only are the harmonics important, but so is the spacing between them, which is constant and repeated up to 15 times.
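A toy illustration of why the spacing carries the pitch, assuming idealized partials at exact integer frequencies: the greatest common divisor of the partial frequencies recovers the fundamental whether or not the fundamental itself is present. This GCD shortcut is a stand-in for illustration, not a model of how the brain extracts pitch.

```python
from functools import reduce
from math import gcd

def harmonic_series(f0: int, count: int, skip_fundamental: bool = False) -> list:
    """Integer frequencies (Hz) of `count` consecutive partials of a harmonic tone."""
    start = 2 if skip_fundamental else 1
    return [n * f0 for n in range(start, start + count)]

def implied_fundamental(partials: list) -> int:
    """Largest frequency of which every partial is a whole multiple (their GCD)."""
    return reduce(gcd, partials)

full = harmonic_series(100, 16)                           # 100, 200, ..., 1600 Hz
gaps = [upper - lower for lower, upper in zip(full, full[1:])]  # 15 identical gaps
no_f0 = harmonic_series(100, 15, skip_fundamental=True)   # 200, 300, ..., 1600 Hz

print(len(gaps), set(gaps))                                   # 15 {100}
print(implied_fundamental(full), implied_fundamental(no_f0))  # 100 100
```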

Just think how much easier it is to identify the pitch of a ‘normal’ instrumental sound with harmonics than that of a pure sine wave. In fact, the fundamental frequency is so unimportant as far as pitch is concerned that it can simply be removed without any impact on pitch. Pierre Schaeffer performed this experiment during the 1950s.
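The same effect shows up in a crude numerical check. The sketch below uses autocorrelation, a standard signal-processing pitch estimator swapped in for illustration (it says nothing about how the brain works), on a synthetic 100 Hz tone with and without its fundamental. The 8 kHz sample rate, tone length and 50-500 Hz search range are arbitrary choices.

```python
import math

SAMPLE_RATE = 8000  # Hz (assumed; low enough to keep the pure-Python loops quick)

def harmonic_tone(f0, harmonics, n_samples, sr=SAMPLE_RATE):
    """Sum of equal-amplitude sines at the given harmonic numbers of f0."""
    return [sum(math.sin(2 * math.pi * h * f0 * t / sr) for h in harmonics)
            for t in range(n_samples)]

def autocorr_pitch(signal, sr=SAMPLE_RATE, fmin=50.0, fmax=500.0):
    """Crude pitch estimate: the lag in the search range with the highest autocorrelation."""
    min_lag, max_lag = int(sr / fmax), int(sr / fmin)
    best_lag = max(range(min_lag, max_lag + 1),
                   key=lambda lag: sum(signal[i] * signal[i + lag]
                                       for i in range(len(signal) - lag)))
    return sr / best_lag

with_f0 = harmonic_tone(100, range(1, 11), 2000)     # fundamental plus nine harmonics
without_f0 = harmonic_tone(100, range(2, 11), 2000)  # same tone, fundamental removed
print(autocorr_pitch(with_f0), autocorr_pitch(without_f0))  # 100.0 100.0
```

Both estimates land on the 80-sample period of 100 Hz, even though the second signal contains no energy at 100 Hz.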

This knowledge is very relevant to music production. Let’s say that you’re writing an arrangement for a song and you find yourself fighting frequency overlap in the 100‑1000 Hz range: you’ve got a sampled brass section that gets in the way of the lead vocals. A straightforward solution would be to equalize the brass section to attenuate the frequencies that neighbor those of the lead vocals. This will probably work, but there is a more radical solution: just remove the fundamentals from the brass samples. The pitch will remain the same, and the frequency overlap problem will be greatly reduced. True, it will alter the brass section’s timbre, but so would an EQ.

Going further, it’s perfectly possible to ‘design’ whole instrumental sections that appear to be pitched between 100 and 200 Hz, whereas in truth this frequency range remains unused. This leaves more room for other instruments, which in turn means more freedom, richer arrangements, and easier mixes.

How Loud Should You Mix?

Let’s recap and list the ear’s built-in EQs and compressors:

External auditory canal: EQ.

Eardrum: EQ + compressor.

Ossicles: EQ + compressor.

Cochlea: EQ + compressor.

In three cases out of four, dynamic and frequency processing are closely linked, with a fundamental consequence: the timbre of the sound we hear depends on its intensity. In other words, the ear applies a different EQ to incoming audio signals depending on how loud they are. This behavior is very different from that of a studio EQ, which applies the same frequency gains regardless of input signal level.
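To make the contrast concrete, here is a deliberately simplified comparison between a studio EQ band and a hypothetical ear-like bass band whose cut grows as the input gets quieter. The linear slope of 0.375 dB per dB below 90 dB is an invented illustrative value, chosen only so that a 40 dB drop costs the bass 15 dB, in line with the monitoring-level example discussed further on; it is not a fit to the equal-loudness data.

```python
def studio_eq(level_db: float, gain_db: float) -> float:
    """A studio EQ band: the same gain whatever the input level."""
    return level_db + gain_db

def toy_ear_bass_band(level_db: float, slope: float = 0.375, ref_db: float = 90.0) -> float:
    """Hypothetical ear-like bass band: the quieter the input, the bigger the cut.

    Below ref_db, every dB of level reduction adds `slope` dB of extra cut.
    Both numbers are illustrative assumptions, not measured data.
    """
    extra_cut = max(0.0, ref_db - level_db) * slope
    return level_db - extra_cut

for monitor_db in (90.0, 50.0):
    ref_1k = monitor_db  # 1 kHz taken as the 0 dB reference
    studio = studio_eq(monitor_db, -3.0) - ref_1k
    ear = toy_ear_bass_band(monitor_db) - ref_1k
    print(f"{monitor_db:.0f} dB: studio bass {studio:+.1f} dB, toy ear bass {ear:+.1f} dB")
```

The studio band keeps its -3 dB offset at both levels, while the toy ear band slides from +0.0 dB to -15.0 dB: the same input spectrum is heard with a different balance.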

Now, this is quite natural. Remember that most transducers in the ear are mechanical. They’re physical objects that are set into vibration. Obviously, you can’t expect a vibrating object to react identically when it’s slightly shivering and when it’s moving like crazy. This is particularly true of a complex lever system such as the ossicles. It’s only common sense that the resonant frequencies will differ. This means that you just can’t expect the ear’s EQs to apply the same gain whatever the input level — and they don’t.

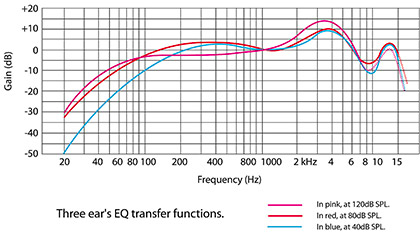

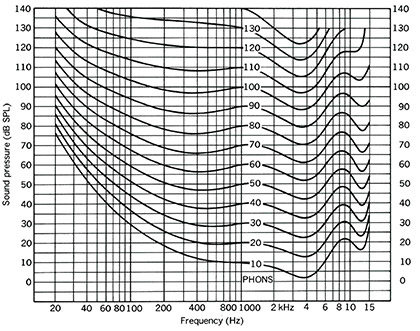

When representing the ear’s EQ transfer functions, we have to do so for a set of given input levels. Figure 6 shows three transfer functions at different levels. We’ve assumed, for this graph, that the EQ’s gain is always 0 dB at 1000 Hz. These transfer functions are derived from what are called the equal‑loudness curves, shown in Figure 7. Equal‑loudness curves are far more commonly published, but they are a bit obscure and not directly expressed in terms of EQ.

Obviously, the ear’s EQ transfer functions are of enormous importance when it comes to mixing. Looking again at Figure 6, what can we read? Consider two tones, one at 60 Hz and one at 1000 Hz, that seem to be equally loud. Reduce the monitoring level by 40 dB, and the 60 Hz tone will now sound 15 dB quieter than the 1000 Hz one. To be convinced, listen to a kick drum sample very quietly: you don’t hear any bass frequencies. Turn up the volume considerably, and suddenly the same kick drum can be heard with plenty of bass frequencies.

This has an important consequence. Imagine you’re in the middle of a mix and you’re listening to it at quite a high level. Everything is going well; your sound is round and powerful. Now reduce the monitoring volume. Hey presto, no more bass frequencies. Your mix is shallow and anything but powerful. So are you sure you want to mix that loud? Naturally, the same principle applies when you’re actually writing the music. If you don’t want to be disappointed afterwards, turn the volume down. High monitoring levels are for the consumer, not the producer. And, by the way, notice how this phenomenon entirely confirms what I was explaining above: forget about those bass frequencies, really. They’re just not reliable.

The choice of a given monitoring level is not trivial. Not only does cranking up the volume change the frequency balance of a mix, but it also changes the perceived pitch. You don’t believe it? Try the following experiment. Take a pair of good‑quality headphones, such as Beyerdynamic DT 770s or AKG K271s. Use them to listen to some music at a high level, then remove the headphones from your head and hold them 10 centimeters in front of your face. You will hear all the pitches in the song drop noticeably, by up to half a tone. This effect is more obvious with some tracks than others; try it with the chorus of the song ‘Circus’ by Britney Spears.
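‘Half a tone’ here means an equal-tempered semitone, a frequency ratio of 2^(1/12), or about 6%. A small helper for expressing such shifts in cents (100 cents per semitone); the 440 Hz reference is just an example value.

```python
import math

def cents(f_from: float, f_to: float) -> float:
    """Pitch interval in cents (100 cents = one equal-tempered semitone)."""
    return 1200.0 * math.log2(f_to / f_from)

SEMITONE = 2 ** (1 / 12)  # frequency ratio of one equal-tempered semitone, about 1.0595

a4 = 440.0
print(f"{a4 * SEMITONE:.1f} Hz is {cents(a4, a4 * SEMITONE):.0f} cents above {a4:.0f} Hz")
```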

Now that we know how the ear works, we can suggest an explanation for this phenomenon. When we listen to loud music, all the compressors in the ear swing into action, including the tensor tympani and stapedian muscles. The tensor tympani tightens the eardrum, and the stapedian muscle stiffens the ossicles. As we’ve seen before, when a vibrating object gets more tense, it resonates at a higher pitch, and the same applies to the eardrum and ossicles. As a consequence, when the audio input gets considerably louder, the whole mechanical part of the ear resonates at higher frequencies. This shifts the overall pattern of stimulation on the tectorial membrane towards higher frequencies, and fools the brain into thinking it’s listening to transposed musical content.

Conclusion

As we’ve seen throughout this article, the ear is a very complicated device, and hearing is a delicate and complex phenomenon. Even if we restrict ourselves to monaural audition, the sense of hearing seems to be at the origin of many illusions. Perceptual aspects that we generally think of as independent, such as timbre, volume and pitch, are in fact interrelated. The fundamental frequency of a harmonic sound, the very one we use to define pitch, is actually of no importance at all as far as our perception of pitch is concerned.

This should put into perspective the common saying that the ears are the ultimate judge in music production. To some extent they certainly are, but as we are now aware, they can also be fooled extremely easily. The only reasonable suggestion would be prudence. When designing sounds, when mixing, in music and/or ambience production in general, when you want to evaluate what you’re doing, take your time. Change your monitoring level, walk to the other end of the studio, listen to your work on other speakers — anything that can stop you fooling yourself. And don’t hesitate to use meters, be they loudness meters or spectrograms. True, they show reductive information that is not necessarily related to what we hear, but they cannot be tricked.

When writing or producing music, the ease with which the ear is confused can also work to your advantage. Knowing how the ear works means that you have a head start when it comes to shaping the message you want your listeners to receive!

— Emmanuel Deruty (Sound on Sound)
